Quantified self apps and wearables seek to construct a seamless loop of information feedback between bodies and machines. But can that be a substitute for the much older, more humanistic goal of mental commitment?

This post has been cross-posted from the Reading Dashboards blog

One of the major selling points of the Jawbone UP, in distinction from many other wearables, is its ‘smart coach’. This is the tool that prompts the wearer to change her behaviour in small, manageable ways, on the basis of data that is collected on her behaviour and bodily signals. These ‘nudges’ focus on various factors in individual wellbeing, such as diet, exercise, sleep and mental habits. Often they are accompanied by references and links to scientific research, to grant them authority that perhaps one wouldn’t otherwise bestow upon a smartphone app.

The smart coach is an example of how everyday data analytics is shifting beyond the provision of dashboards or key performance indicators, towards recommendations for how to behave. Rather than provide the user with further information and choice, adding complexity to their decision-making, it seeks to remove the burden of responsibility from the individual. As Natasha Dow Schüll has argued, “It is not really about self-knowledge anymore. It’s the nurselike application of technology.” The sharing of cognition and decision-making with ‘smart’ devices inevitably involves a form of mental outsourcing on the part of the human.

There may be various reasons why this is attractive. The rationalist, economistic answer is that it results in greater efficiency or utility. Obeying the ‘smart coach’ really will make you more productive, happier and healthier than struggling through your day unaided (of course this poses considerable questions about the values and ideologies that are latent in such goals). More psychologically plausible, I would suggest, is the sense that these technologies promise to care for you and to reduce the existential burden of freedom. Our submission to them is less a reflection of their scientific authority – regardless of the evidence provided by the ‘smart coach’ – and more a reflection of their mystery and opacity, which produce a sense of sublimity.

The great challenge of any behaviour change programme, be it a January diet or something more sophisticated, is how to make it stick. Equally, the threat hovering over any wearable or quantified self app is that it will cease to be worn, looked at or taken into account. Bathroom scales get mothballed and so, likewise, do pedometer wristbands. It needs to be recognised that one of the great design challenges accompanying any wearable device or behaviour change app is merely to carry on being noticed, recognised, taken seriously at all. Ultimately, this is a greater problem for the technologist than the question of how the user should or shouldn’t live his life. First and foremost, smart devices insist, the user must be someone who continues to be the user.

I’ve become more deeply aware of this curiosity over recent months of using and wearing a Jawbone UP. Once the novelty of having my sleep, heart-rate and footsteps tracked had worn off, the ‘smart coach’ became my primary way of interacting with the black rubber band around my wrist. It sets me challenges, offers suggestions, congratulates me for my achievements and so on. I once felt guilty for leaving the band at home, as if I owed it something. In some form of Stockholm syndrome, I worried that it would have nobody to evaluate or nudge, if no longer wrapped around my wrist.

But the underlying problem confronted by the designers dawned on me one day, when the ‘smart coach’ briefly descended into an absurd circularity: it told me that by going to bed earlier, I would be better able to take more steps the following day. And yet one of its main justifications for taking more steps is that this activity leads to better sleep! Given that sleep and walking are just about the only two things it is able to detect unaided by me, I began to wonder if the importance being attached to these two physical cycles was more a reflection on current technological affordances than on latest medical wisdom. By seeking to make me care about my sleep and footsteps, it was really seeking to make me care about Jawbone.

Around the same time, my 2-year-old daughter saw the wristband, pointed and said “what’s that, daddy?” I replied that it was a wristband. “What’s it for, daddy?” To this, I really had no clue what to reply. I was beginning to suspect that its main function was to carry on being worn, which is really no function at all.

—

From a cybernetic perspective, communication is something that occurs in systems of human and non-human entities via feedback loops. Information flows through bodies and into other bodies, crossing interfaces as it goes. Every time it crosses an interface, be that between body and machine or image and eye, it is transformed via some type of analogy. Systems that are in a state of equilibrium are those in which the interfaces avoid disrupting the overall communication loop, but facilitate constant adaptation.

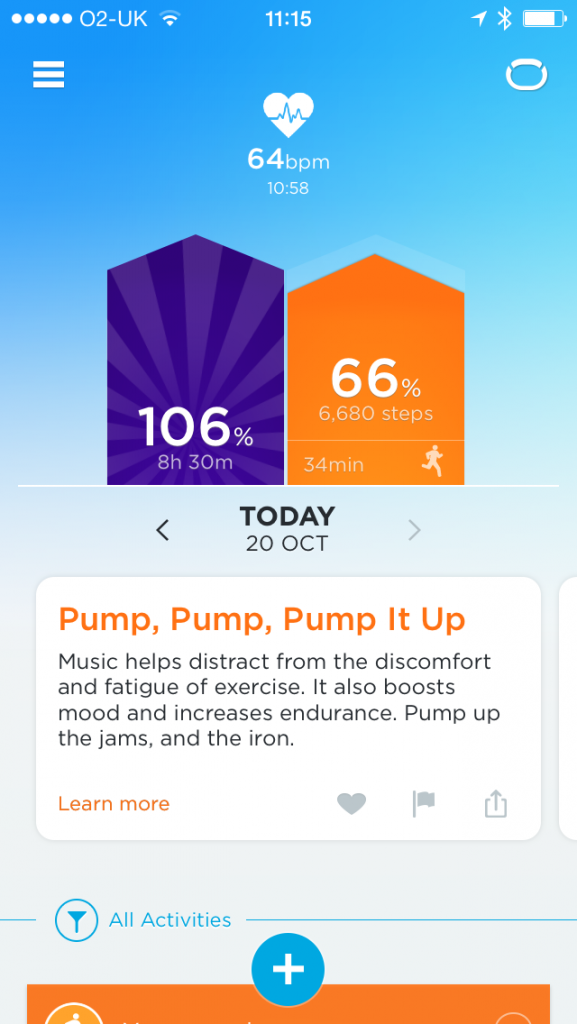

In the idealised world of Jawbone UP users, the communication loop would look as follows. The body sends off information in the form of rhythmical movements (pulse rate and steps), which travel across various interfaces into the wristband. These are sent to the smartphone via Bluetooth, from where they can be transmitted to Jawbone servers for analysis, to build up a profile of the user over time (in addition to the database extracted from all Jawbone users).

A data dashboard (crafted to suit the physical properties of the human eye) is then generated for the user to see, along with ‘smart coach’ tips (crafted to suit the behavioural patterns of the human animal) to read. These are processed cognitively by the brain, leading to altered physical behaviour, which leads to different rhythmical movements to be detected by the wristband. A perfect loop of communication is achieved. The Jawbone user would thereby be constantly in a process of becoming something else, with the promise being that that ‘something else’ would be fitter, happier, more productive.

Amidst all of this, Jawbone may be marketed in terms of ‘wellbeing’ and ‘productivity’, but its business goal is ultimately very simple. Like Facebook, Google or a whole host of other platforms, it depends on being one of the various interfaces that is crossed in the course of an ordinary human day. If it fails to insinuate itself into such a position – that is, if it fails to replace other ways of interfacing with one’s body and environment – it cannot succeed.

One obvious vulnerability in this circuit is the interface between the dashboard/‘smart coach’ and the brain. This is why data visualisation is so critical: it is a weak spot in the cybernetic loop between human and environment. But there is another vulnerability too, namely between ‘brain’ (or ‘mind’, as metaphysicians might say) and behaviour. As Orit Halpern has argued compellingly, cybernetics has always been haunted by the ghost of humanistic categories – such as ‘mind’, ‘consciousness’, ‘agency’ – that it has sought to leave behind. The difficulty of ‘black-boxing’ the human, as a series of ‘inputs’ and ‘outputs’, makes it endlessly tempting to reintroduce humanistic explanations. In the case of Jawbone, something happens between ‘input’ and ‘output’ that in my humanistic vernacular I would call “not giving enough of a damn”.

This is what the Jawbone is most threatened by. Not the wristband breaking down. Not even the visualisations or ‘nudges’ being ignored (I continue to look at them out of curiosity). It’s that the user is simply insufficiently concerned with living a different life, or rather, insufficiently concerned with walking and sleeping as indicators of a different life. What I’ve discovered, over several months as a Jawbone user, is that no amount of data nor any degree of smartness on the part of the ‘coach’ is enough to make me care about something I don’t already care about. Sleeping and walking are empirically real, with plausible causal relations to my body and environment, but the leap to becoming matters of concern is something beyond the logic of information and feedback.

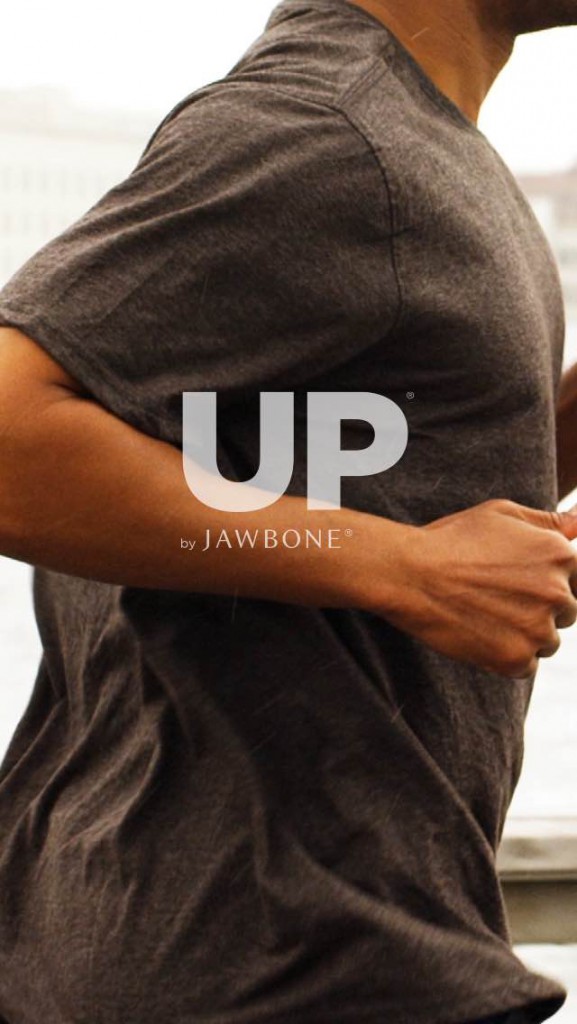

A glimpse of Jawbone UP’s ideal user is provided when one opens the iPhone app. An image appears of a tanned, muscular male body, jogging in a casual grey t-shirt. What is most telling about it is not the image itself, but the body part that is cropped out: the head. Here is a man so perfectly adapted to his environment, that he doesn’t even need to think about anything. If Jawbone’s feedback loop were ever to be perfected, there would be no need for conscious engagement with the world at all. The body would simply respond directly to the data.

In this, the distinction between rationality and reason becomes more obvious. As Halpern has documented in Beautiful Data, various forces in post-war design, behavioural science and computer science led to a stripping down of ‘reason’ to the status of ‘rationality’, in which the latter refers to a question of optimal decision-making under conditions of uncertainty (as per game theory, for example).

‘Rationality’, by this standard, is something amenable to mathematical scrutiny, and something of which a machine is no less (and likely much more) capable than a human. It does not require the modern, liberal subject. It would be ascribed to brains rather than to minds, and might even be attributed to other body parts too, when they are processing information in an optimal fashion.

‘Reason’ implies something else altogether, which is potentially ethical in character. In Why Things Matter to People, Andrew Sayer argues that “we are beings whose relation to the world is one of concern”. This concern is both moral and reasonable, inasmuch as we provide reasons why certain things are valuable to us and other things are not, or why some things upset us and others cause us joy. These are things we reflect on and provide explanations for, not unlike empirical or aesthetic observations. I can tell you why I care about the things I care about, and equally why I don’t care all that much about the number of steps I take.

This deeply humanistic view is palpably incompatible with a cybernetic one. It implies a type of ‘interface’ that is too unpredictable to be controllable: the interface of dialogue. Purveyors of smart technologies are more comfortable imagining cities, bodies or social relations mediated by something other than language, in the cultural sense of that term. But in the absence of a discursive culture asserting the importance of, say, sleeping and walking, it is impossible for people to become concerned by data or devices which analyse those things. This is the bind that the designers of such tools must find themselves in: to interface with us as humans, with all of the foibles and failures that go with that, or purely as bodies, which are incapable of experiencing the ‘commitment’, ‘concern’ or ‘giving a damn’ that is needed for technology to become integral to everyday life.
